DeepPlanning: Benchmarking Long-Horizon Agentic Planning with Verifiable Constraints

Abstract

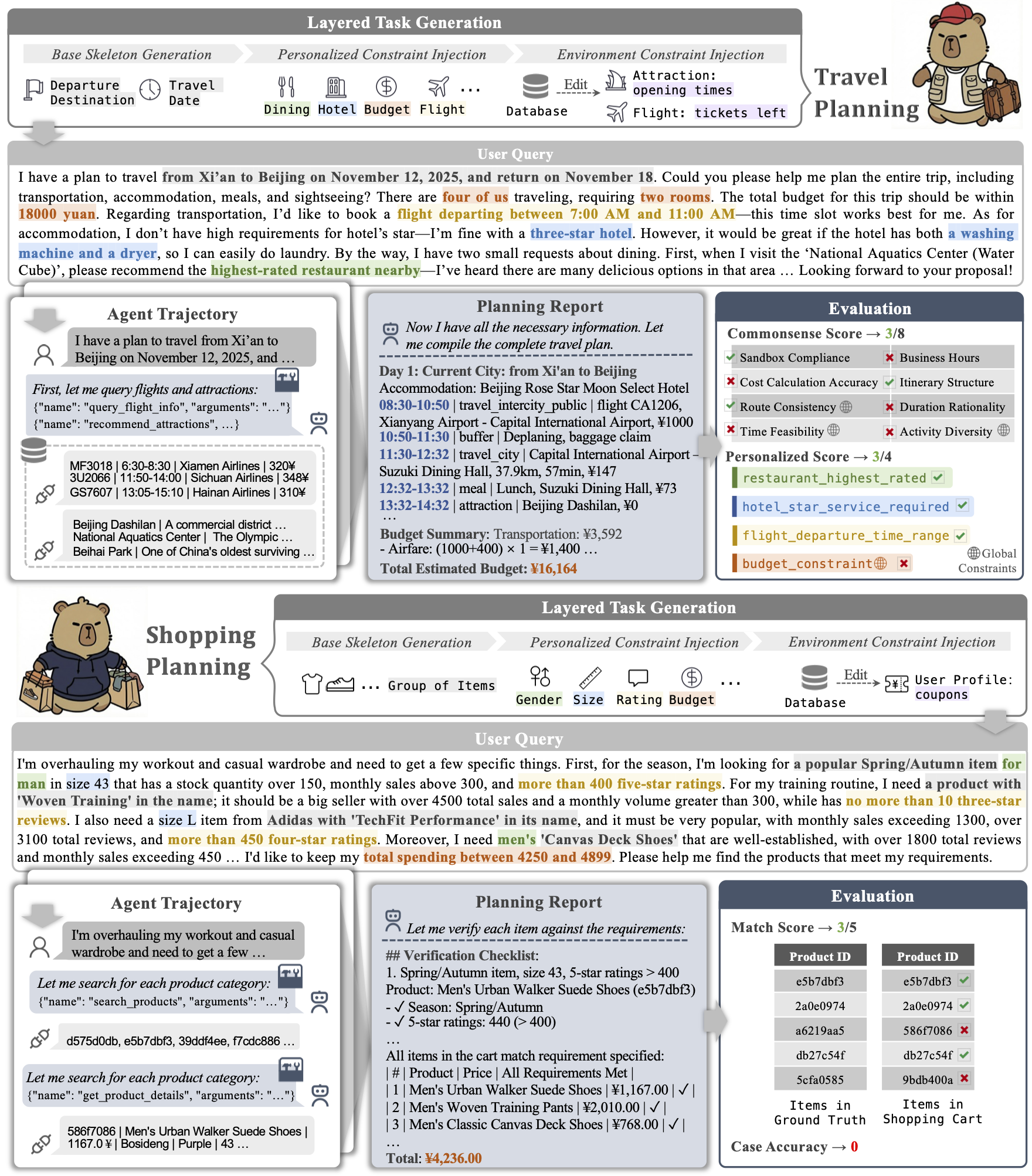

While agent evaluation has shifted toward long-horizon tasks, most benchmarks still emphasize local, step-level reasoning rather than the global constrained optimization (e.g., time and financial budgets) that demands genuine planning ability. Meanwhile, existing LLM planning benchmarks underrepresent the active information gathering and fine-grained local constraints typical of real-world settings. To address this, we introduce DeepPlanning, a challenging benchmark for practical long-horizon agent planning. It features multi-day travel planning and multi-product shopping tasks that require proactive information acquisition, local constrained reasoning, and global constrained optimization. Evaluations on DeepPlanning show that even frontier agentic LLMs struggle with these problems, highlighting the importance of reliable explicit reasoning patterns and parallel tool use for achieving better effectiveness-efficiency trade-offs. Error analysis further points to promising directions for improving agentic LLMs over long planning horizons. We open-source the code and data to support future research.

📊 Benchmark Details

DeepPlanning features two realistic, long-horizon domains that require agents to navigate complex environments under strict, verifiable global constraints.

📉 Statistics at a Glance

| Metric | ✈️ Travel Planning | 🛒 Shopping Planning |
|---|---|---|
| Tasks | 120 (ZH) / 120 (EN) | 120 (EN) |
| Toolkits | 9 Specialized APIs | 15 Specialized APIs |
| Data Volume | 7,708 records / task | 171 records / task |
| Primary Goal | Minute-level itinerary | Optimized shopping list |
| Environment | Isolated Python Sandbox | Isolated Python Sandbox |

✈️ Domain 1: Travel Planning

Agents act as personal travel assistants to organize multi-day trips where time, location, and budget are tightly coupled.

- Input: Natural language query (destination, dates, budget) and specific preferences (e.g., “3-star hotel with a dryer”).

- Tools: 9 APIs for searching flights, trains, hotels, restaurants, and attractions.

- Output: A structured planning report with itemized costs and a minute-by-minute schedule.

- Core Skill: Spatio-temporal reasoning—ensuring flight times, attraction hours, and transit durations all align without overlaps or budget overruns.
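The spatio-temporal feasibility checks described above can be illustrated with a small validator. This is a minimal sketch under our own assumptions: the `Activity` record, the `check_day` function, the minutes-since-midnight encoding, and the `transit` lookup table are hypothetical and are not DeepPlanning's data format or its evaluation code.

```python
from dataclasses import dataclass

@dataclass
class Activity:
    """Hypothetical itinerary entry; all times are minutes since midnight."""
    name: str
    start: int
    end: int
    cost: float
    opens: int = 0           # venue opening time
    closes: int = 24 * 60    # venue closing time

def check_day(activities, transit, budget):
    """Return a list of violations for one day's schedule (empty means feasible).

    `transit[(a, b)]` is an assumed travel time in minutes from activity a to b.
    """
    violations = []
    plan = sorted(activities, key=lambda a: a.start)
    # Local checks: each activity must be well-formed and within opening hours.
    for act in plan:
        if act.end <= act.start:
            violations.append(f"{act.name}: end is not after start")
        if act.start < act.opens or act.end > act.closes:
            violations.append(f"{act.name}: outside opening hours")
    # Sequencing: the next activity may start only after the previous one ends plus transit.
    for prev, nxt in zip(plan, plan[1:]):
        # Missing pairs are assumed to need no travel time (a simplification).
        travel = transit.get((prev.name, nxt.name), 0)
        if prev.end + travel > nxt.start:
            violations.append(f"{prev.name} -> {nxt.name}: overlap or missed transit")
    # Global check: the whole day must stay within budget.
    total = sum(a.cost for a in plan)
    if total > budget:
        violations.append(f"total cost {total} exceeds budget {budget}")
    return violations

day = [
    Activity("Museum", 9 * 60, 11 * 60, 60, opens=9 * 60, closes=17 * 60),
    Activity("Lunch", 11 * 60 + 20, 12 * 60 + 30, 120),
    Activity("Tower", 12 * 60 + 40, 14 * 60, 200, opens=10 * 60, closes=22 * 60),
]
transit = {("Museum", "Lunch"): 15, ("Lunch", "Tower"): 20}
print(check_day(day, transit, budget=400))
# → ['Lunch -> Tower: overlap or missed transit']
```

Here the 20-minute transfer after lunch means arriving at the tower at 12:50, ten minutes after its planned 12:40 start, so the day is rejected even though it stays under budget.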

🛒 Domain 2: Shopping Planning

Agents must solve a combinatorial optimization problem to find the best products while maximizing discount utility.

- Input: Shopping lists with detailed attribute requirements and total budget limits.

- Tools: 15 APIs for semantic search, multi-attribute filtering, and coupon management.

- Output: A structured JSON cart containing the optimal set of products and applied coupons.

- Core Skill: Combinatorial optimization, i.e., applying complex coupon-stacking rules (e.g., cross-store vs. same-brand) to reach the lowest possible final price.
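Coupon stacking turns cart pricing into a search over coupon subsets. The sketch below assumes a simplified rule set of our own: the `Coupon` type, `final_price`, `best_coupons`, the "one coupon per scope" restriction, and flat-amount discounts checked against pre-discount subtotals are hypothetical and are not the benchmark's actual coupon semantics.

```python
from dataclasses import dataclass
from itertools import combinations

@dataclass(frozen=True)
class Coupon:
    """Hypothetical coupon: `scope` is "cross_store" (whole cart) or a brand name."""
    code: str
    scope: str
    threshold: float  # minimum pre-discount subtotal within the scope
    discount: float   # flat amount taken off

def final_price(cart, coupons):
    """Price after applying `coupons`, or None if the combination is not allowed.

    `cart` is a list of (brand, price). Assumed stacking rules: at most one coupon
    per scope, and each coupon's threshold is checked on pre-discount subtotals.
    """
    scopes = [c.scope for c in coupons]
    if len(scopes) != len(set(scopes)):
        return None  # two coupons with the same scope do not stack
    total = sum(price for _, price in cart)
    off = 0.0
    for c in coupons:
        if c.scope == "cross_store":
            base = total
        else:
            base = sum(price for brand, price in cart if brand == c.scope)
        if base < c.threshold:
            return None  # threshold not met, so this coupon cannot be used
        off += c.discount
    return max(total - off, 0.0)

def best_coupons(cart, coupons):
    """Exhaustively try every coupon subset and keep the cheapest valid one."""
    best_price, best_combo = sum(price for _, price in cart), ()
    for k in range(1, len(coupons) + 1):
        for combo in combinations(coupons, k):
            price = final_price(cart, combo)
            if price is not None and price < best_price:
                best_price, best_combo = price, combo
    return best_price, best_combo

cart = [("Acme", 120.0), ("Acme", 80.0), ("Zen", 150.0)]
coupons = [
    Coupon("X1", "cross_store", 300, 30),
    Coupon("X2", "cross_store", 200, 25),
    Coupon("A1", "Acme", 200, 20),
    Coupon("Z1", "Zen", 200, 40),
]
price, combo = best_coupons(cart, coupons)
print(price, [c.code for c in combo])
# → 300.0 ['X1', 'A1']
```

Exhaustive search is exponential in the number of coupons, which is acceptable for a handful of candidates; the point is that the greedy choice (the largest single discount, Z1) is not even valid here because the Zen subtotal misses its threshold.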

🧠 Core Planning Competencies

DeepPlanning evaluates three critical agentic abilities:

- Proactive Information Acquisition: Actively calling APIs to discover hidden environment states (e.g., checking whether an attraction is closed or a product is in stock) instead of hallucinating facts.

- Local Constrained Reasoning: Satisfying step-level logic, such as matching specific brands, sizes, or hotel amenities requested by the user.

- Global Constrained Optimization: Managing holistic constraints, such as total budget caps and multi-day time feasibility, where a single local mistake invalidates the entire plan.
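Proactive information acquisition and local constraint checking fit together naturally: fetch the facts first, then filter against them. The sketch below also issues the lookups concurrently, in the spirit of the parallel tool use highlighted in the abstract. It is a minimal illustration under stated assumptions: `get_hotel_details`, `find_hotels`, and the in-memory `_FAKE_HOTELS` table are hypothetical stand-ins, not DeepPlanning's actual tool APIs.

```python
import asyncio

# Hypothetical stand-in for a hotel-details tool; a real agent would call the
# benchmark's own APIs, whose names and signatures are not shown here.
_FAKE_HOTELS = {
    "H1": {"stars": 3, "amenities": {"wifi", "dryer"}},
    "H2": {"stars": 3, "amenities": {"wifi"}},
    "H3": {"stars": 4, "amenities": {"dryer"}},
}

async def get_hotel_details(hotel_id):
    await asyncio.sleep(0.01)  # simulate tool-call latency
    return hotel_id, _FAKE_HOTELS[hotel_id]

async def find_hotels(candidates, stars, amenity):
    # Proactive acquisition: fetch every candidate's details concurrently.
    results = await asyncio.gather(*(get_hotel_details(h) for h in candidates))
    # Local constraints are checked against fetched facts, never assumed ones.
    return [h for h, info in results
            if info["stars"] == stars and amenity in info["amenities"]]

print(asyncio.run(find_hotels(["H1", "H2", "H3"], stars=3, amenity="dryer")))
# → ['H1']
```

`asyncio.gather` returns results in the order the awaitables were passed, so the filtered list preserves the candidate order while total latency is roughly that of one lookup rather than three.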

🏆 Leaderboard 🏆

Comprehensive evaluation results on DeepPlanning. Results are averaged over four runs. Bold indicates the best result.

| Rank | Model | Avg Acc. | Travel CS Score | Travel PS Score | Travel Comp Score | Travel Case Acc. | Shopping Match Score | Shopping Case Acc. |
|---|---|---|---|---|---|---|---|---|
| 1 | | **44.6** | **88.5** | **83.3** | **85.8** | **35.0** | **84.8** | **54.2** |
| 2 | | 33.9 | 79.3 | 70.9 | 75.1 | 22.7 | 80.0 | 45.0 |
| 3 | | 31.6 | 78.7 | 65.9 | 72.3 | 18.9 | 80.4 | 44.2 |
| 4 | | 28.8 | 67.1 | 57.7 | 62.4 | 5.9 | 80.6 | 51.7 |
| 5 | | 28.7 | 64.0 | 61.7 | 62.8 | 13.8 | 82.6 | 43.5 |
| 6 | | 26.3 | 67.5 | 58.8 | 63.1 | 6.7 | 82.2 | 45.8 |
| 7 | | 25.5 | 65.2 | 58.4 | 61.8 | 7.6 | 80.0 | 43.3 |
| 8 | | 24.9 | 76.5 | 55.6 | 66.1 | 11.3 | 76.9 | 38.5 |
| 9 | | 23.2 | 58.4 | 25.1 | 41.8 | 0.7 | 78.0 | 45.8 |
| 10 | | 21.6 | 47.4 | 35.0 | 41.2 | 0.7 | 78.8 | 42.5 |
| 11 | | 20.4 | 43.6 | 56.7 | 50.1 | 0.0 | 77.5 | 40.8 |
| 12 | | 17.2 | 57.1 | 37.7 | 47.4 | 2.7 | 74.0 | 31.7 |
| 13 | | 17.2 | 53.4 | 42.8 | 48.1 | 1.1 | 75.8 | 33.3 |
| 14 | | 17.1 | 35.4 | 22.4 | 28.9 | 0.0 | 73.3 | 34.1 |
| 15 | | 17.0 | 62.3 | 42.0 | 52.2 | 3.2 | 69.1 | 30.8 |
| 16 | | 14.0 | 44.0 | 44.6 | 44.3 | 0.4 | 72.5 | 27.5 |
| 17 | | 12.8 | 36.7 | 30.7 | 31.8 | 0.8 | 70.2 | 24.7 |
| 18 | | 12.4 | 58.0 | 36.6 | 47.2 | 3.0 | 69.1 | 21.7 |
| 19 | | 12.1 | 45.2 | 32.5 | 38.9 | 0.0 | 65.8 | 24.2 |
| 20 | | 11.3 | 43.0 | 47.5 | 45.3 | 0.0 | 68.1 | 22.5 |
| 21 | | 7.5 | 37.3 | 13.0 | 25.1 | 0.0 | 63.9 | 15.0 |
| 22 | | 7.1 | 38.9 | 22.5 | 30.7 | 0.0 | 61.2 | 14.2 |
| 23 | | 5.3 | 37.4 | 12.1 | 24.7 | 0.0 | 58.3 | 10.6 |
| 24 | | 4.5 | 54.3 | 29.9 | 42.1 | 0.4 | 58.6 | 8.6 |
| 25 | | 3.0 | 39.6 | 19.7 | 29.6 | 0.0 | 50.1 | 5.9 |

Avg Acc. = Average Accuracy; CS Score = Commonsense Score; PS Score = Personalized Score; Comp Score = Composite Score; Case Acc. = Case Accuracy; Match Score = Shopping Match Score.
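The reported Avg Acc. values appear consistent with an unweighted mean of the two Case Acc. columns (Travel and Shopping); for example, rank 1 gives (35.0 + 54.2) / 2 = 44.6. This is our reading of the published numbers, not a definition stated here. The sketch below recomputes it for a few rows copied from the table, allowing for rounding to one decimal place; the `avg_acc` helper and the `ROWS` sample are hypothetical.

```python
# A few rows copied from the leaderboard:
# (rank, reported Avg Acc., Travel Case Acc., Shopping Case Acc.)
ROWS = [
    (1, 44.6, 35.0, 54.2),
    (2, 33.9, 22.7, 45.0),
    (4, 28.8, 5.9, 51.7),
    (11, 20.4, 0.0, 40.8),
    (25, 3.0, 0.0, 5.9),
]

def avg_acc(travel_case, shopping_case):
    """Assumed aggregation: unweighted mean of the two domain case accuracies."""
    return (travel_case + shopping_case) / 2

for rank, reported, travel, shopping in ROWS:
    # Published values are rounded to one decimal, so allow up to 0.05 of slack
    # (plus a tiny epsilon for floating-point error).
    assert abs(avg_acc(travel, shopping) - reported) <= 0.05 + 1e-9, rank
```

Because Case Acc. requires an entire case to be correct, a model can post a high Match or Composite score and still land near zero on Avg Acc., which is what the lower half of the table shows.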

Acknowledgments

We thank Fliggy (飞猪) and Amap (高德) for their technical support.